To continue learning about the enhancements in vSphere 7, this time we will look at what is new in storage.

vSphere supports various storage options and devices to give us the flexibility to set up the storage solution that integrates with our environment. Using vSphere storage and its enhanced features can help meet the demands of our workloads and improve the overall management experience of our virtual data center.

Support for iSER-Enabled vSphere Virtual Volumes Arrays

iSCSI Extension for RDMA (iSER) provides high-performance iSCSI connectivity for standard RDMA NICs. In addition to supporting traditional iSCSI, ESXi supports the iSER protocol. When the iSER protocol is enabled, the iSCSI framework on the ESXi host can use the RDMA transport instead of TCP/IP. vSphere 7 also adds iSER support for vSphere Virtual Volumes.

In vSphere 7, support for iSER requires the following:

• RoCE-enabled devices

• A target array that supports iSER on RoCE

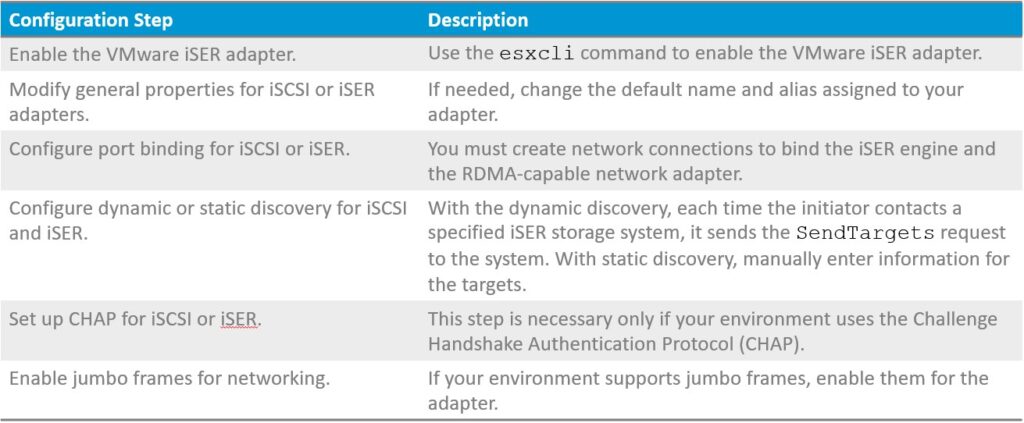

Configuring iSER

Configure iSER on the ESXi host so that the iSCSI framework on the host can use Remote Direct Memory Access (RDMA) transport instead of TCP/IP.

When installed on the host, the RDMA-capable adapter appears in vCenter Server as a network adapter (vmnic). To make the adapter functional, we must enable the VMware iSER component and connect the iSER adapter to the RDMA-capable vmnic. We can then configure properties, such as targets and CHAP, for the iSER adapter.

NOTE: iSER does not support NIC teaming. When configuring port binding, use only one RDMA adapter per virtual switch.
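iSER does not support NIC teaming, so it can help to check a planned port-binding layout before applying it. The following Python sketch is not a VMware tool. It assumes a hypothetical plan written as (virtual switch, RDMA vmnic) pairs, and the switch and vmnic names are made up. It flags any virtual switch that would carry more than one RDMA adapter.

```python
from collections import defaultdict


def find_teaming_violations(bindings):
    """Return virtual switches that would carry more than one RDMA adapter.

    `bindings` is a hypothetical plan: an iterable of (vswitch, vmnic) pairs.
    iSER does not support NIC teaming, so each virtual switch used for iSER
    port binding should have exactly one RDMA-capable uplink.
    """
    adapters_per_switch = defaultdict(set)
    for vswitch, vmnic in bindings:
        adapters_per_switch[vswitch].add(vmnic)
    # Keep only the switches that break the one-adapter-per-switch rule.
    return {
        vswitch: sorted(vmnics)
        for vswitch, vmnics in adapters_per_switch.items()
        if len(vmnics) > 1
    }


if __name__ == "__main__":
    plan = [
        ("vSwitch-iSER-A", "vmnic4"),
        ("vSwitch-iSER-B", "vmnic5"),
        ("vSwitch-iSER-B", "vmnic6"),  # two RDMA uplinks on one switch
    ]
    print(find_teaming_violations(plan))
```

For this plan the check reports `vSwitch-iSER-B` with both uplinks. Moving one of them to its own virtual switch clears the violation.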

Requirements for iSER

To enable the VMware iSER adapter, use the esxcli command and ensure that you meet the following requirements:

• Verify that your iSCSI storage supports the iSER protocol.

• Install the RDMA-capable adapter on your ESXi host.

• Use an RDMA-capable switch.

• Enable flow control on the ESXi host. To enable flow control for the host, use the esxcli system module parameters command.

• Configure RDMA switch ports to create lossless connections between the iSER initiator and target. For details on enabling flow control, see VMware knowledge base article 1013413.
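The requirements above work as a pre-flight checklist. The following Python sketch turns them into one. The `IserReadiness` record and its field names are hypothetical, and someone still has to verify each item on the real host, array, and switch. The code only reports which items have not been confirmed yet.

```python
from dataclasses import dataclass, fields


@dataclass
class IserReadiness:
    """Hypothetical record of what has been verified for one ESXi host."""
    array_supports_iser: bool
    rdma_adapter_installed: bool
    rdma_capable_switch: bool
    host_flow_control_enabled: bool
    switch_ports_lossless: bool


# Human-readable wording for each field, following the requirement list.
DESCRIPTIONS = {
    "array_supports_iser": "iSCSI storage supports the iSER protocol",
    "rdma_adapter_installed": "RDMA-capable adapter installed on the ESXi host",
    "rdma_capable_switch": "RDMA-capable switch in use",
    "host_flow_control_enabled": "Flow control enabled on the ESXi host",
    "switch_ports_lossless": "RDMA switch ports configured for lossless connections",
}


def missing_requirements(state):
    """Return the unmet requirements in the order they are listed."""
    return [
        DESCRIPTIONS[f.name] for f in fields(state) if not getattr(state, f.name)
    ]


if __name__ == "__main__":
    host = IserReadiness(True, True, True, False, True)
    print(missing_requirements(host))
```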

Enhanced Support for NVMe

Non-Volatile Memory Express (NVMe) is a standardized protocol for high-performance multiqueue communication with NVM devices. ESXi supports the NVMe protocol to connect to local and networked storage devices.

vSphere 7 introduces the following features to enhance NVMe support:

• Multipathing in the Pluggable Storage Architecture (PSA) stack through the High-Performance Plug-in (HPP)

• Hot-add and hot-remove of local NVM devices, if the server system supports it

• Support for NVMe devices accessed over Remote Direct Memory Access (RDMA) or Fibre Channel

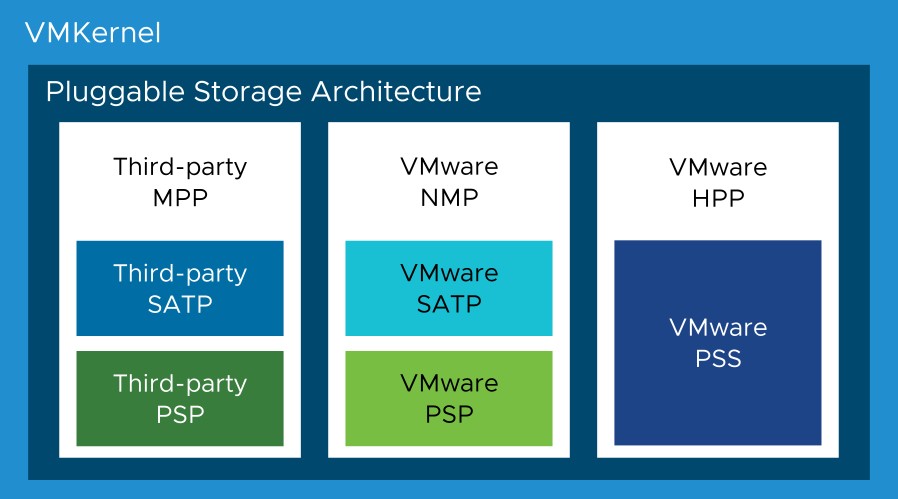

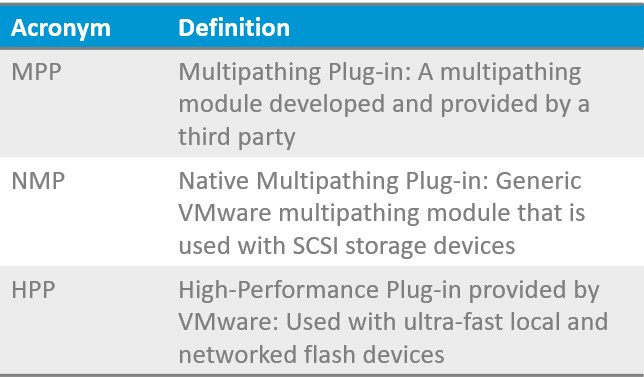

ESXi uses Pluggable Storage Architecture (PSA) as a VMkernel layer for managing storage multipathing. The PSA is an open and modular framework that coordinates the software modules responsible for multipathing operations. These modules include the generic multipathing modules that VMware provides, the Native Multipathing Plug-in (NMP) and the High-Performance Plug-in (HPP), as well as third-party multipathing plug-ins (MPPs).

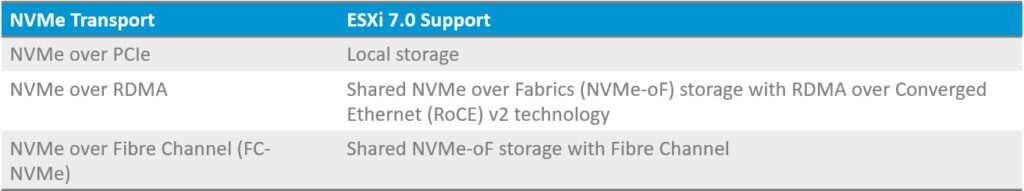

Supported NVMe Topologies

In vSphere 7, the NVMe storage can be directly attached to a host using a Peripheral Component Interconnect Express (PCIe) interface or indirectly through different fabric transports.

ESXi 7.0 supports the NVMe protocol to connect to local and networked storage devices. For local devices, we can use a PCIe interface. For devices on a shared storage array, we use NVMe over Fabrics (NVMe-oF), either NVMe over Fibre Channel (FC-NVMe) or NVMe over RDMA with the RoCE v2 technology.
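The following Python sketch summarizes what the host needs for each topology. The adapter descriptions come from the FC-NVMe and NVMe over RDMA sections of this post. The enum and dictionary are just a convenient illustration, not a VMware data model.

```python
from enum import Enum


class NvmeTopology(Enum):
    LOCAL_PCIE = "local PCIe"
    FC_NVME = "NVMe over Fibre Channel"
    RDMA_ROCE_V2 = "NVMe over RDMA (RoCE v2)"


# Adapters the host needs for each topology, as described in this post.
REQUIRED_ADAPTERS = {
    NvmeTopology.LOCAL_PCIE: ["NVMe device attached through a PCIe interface"],
    NvmeTopology.FC_NVME: ["hardware Fibre Channel HBA that supports NVMe"],
    NvmeTopology.RDMA_ROCE_V2: [
        "RDMA network adapter that supports RoCE v2",
        "software NVMe over RDMA storage adapter",
    ],
}


def is_nvme_of(topology):
    """Everything except local PCIe storage goes over a fabric (NVMe-oF)."""
    return topology is not NvmeTopology.LOCAL_PCIE


if __name__ == "__main__":
    for topology in NvmeTopology:
        kind = "NVMe-oF" if is_nvme_of(topology) else "local"
        print(f"{topology.value} ({kind}): {', '.join(REQUIRED_ADAPTERS[topology])}")
```

NVMe over RDMA is the only topology that needs two adapters: a physical RDMA NIC plus a software storage adapter layered on top of it.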

FC-NVMe and NVMe over RDMA (RoCE v2)

vSphere 7 supports remote access to shared NVMe storage, such as NVMe over Fibre Channel (FC-NVMe) and NVMe over RDMA with RoCE v2 technology.

For more details about VMware NVMe concepts, see the vSphere Storage documentation.

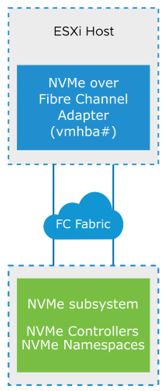

Support for FC-NVMe

FC-NVMe is a technology that maps NVMe onto the Fibre Channel protocol. With FC-NVMe, data and commands can be transferred between a host computer and a target storage device. To access FC-NVMe storage, we must install a Fibre Channel storage adapter that supports NVMe on our ESXi host. We do not need to configure the controller: after we install the required hardware NVMe adapter, it automatically connects to all targets and controllers that it can reach. Our ESXi host detects the device and displays it in the vSphere Client as a standard Fibre Channel adapter (vmhba) with the storage protocol indicated as NVMe. We can later reconfigure the adapter and disconnect its controllers, or connect other controllers that were not available during host boot.
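The controller lifecycle described above can be modeled in a few lines of Python. `FcNvmeAdapterModel` is a hypothetical toy class, not a VMware API, and the adapter and controller names are invented. At boot the adapter connects to every reachable controller with no manual setup. Afterwards, individual controllers can be disconnected, or connected once they come online.

```python
class FcNvmeAdapterModel:
    """Toy model of a hardware FC-NVMe adapter (vmhba); not a VMware API."""

    def __init__(self, name, reachable_at_boot):
        self.name = name
        self.storage_protocol = "NVMe"  # shown as the protocol in the vSphere Client
        # No controller configuration needed: every reachable controller is connected.
        self.connected = set(reachable_at_boot)

    def connect(self, controller):
        """Connect a controller that was not available during host boot."""
        self.connected.add(controller)

    def disconnect(self, controller):
        """Disconnect a controller; controllers not connected are ignored."""
        self.connected.discard(controller)


if __name__ == "__main__":
    hba = FcNvmeAdapterModel("vmhba64", {"ctrl-1", "ctrl-2"})
    hba.disconnect("ctrl-1")
    hba.connect("ctrl-3")  # came online after boot
    print(hba.name, hba.storage_protocol, sorted(hba.connected))
```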

Requirements for FC-NVMe

When we use FC-NVMe, the following components are required:

• Fibre Channel storage array that supports NVMe.

• NVMe controller on your storage array.

• ESXi 7.0 or later.

• Hardware NVMe adapter on your ESXi host: This adapter is typically a Fibre Channel host bus adapter (HBA) that supports NVMe.

Configuration maximums for NVMe-oF:

• 32 namespaces

• 128 paths

• 4 paths per namespace per host
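The three maximums are consistent with each other: 32 namespaces × 4 paths per namespace = 128 paths. The following Python sketch checks a planned layout for one host against them. It assumes a hypothetical representation of the plan as (namespace, path) pairs. The limits are the ones listed above. Everything else is illustrative.

```python
from collections import Counter

# vSphere 7 NVMe-oF configuration maximums listed above.
MAX_NAMESPACES = 32
MAX_PATHS = 128
MAX_PATHS_PER_NAMESPACE = 4  # per namespace, per host


def check_nvmeof_plan(paths):
    """Validate one host's planned (namespace_id, path_name) pairs.

    Returns a list of human-readable problems; an empty list means the plan
    fits within the maximums.
    """
    unique_paths = set(paths)
    paths_per_namespace = Counter(ns for ns, _ in unique_paths)
    problems = []
    if len(paths_per_namespace) > MAX_NAMESPACES:
        problems.append(f"{len(paths_per_namespace)} namespaces (max {MAX_NAMESPACES})")
    if len(unique_paths) > MAX_PATHS:
        problems.append(f"{len(unique_paths)} paths (max {MAX_PATHS})")
    for ns, count in sorted(paths_per_namespace.items()):
        if count > MAX_PATHS_PER_NAMESPACE:
            problems.append(f"namespace {ns}: {count} paths (max {MAX_PATHS_PER_NAMESPACE})")
    return problems


if __name__ == "__main__":
    # 32 namespaces x 4 paths each = 128 paths: exactly at every limit.
    full = [(ns, f"path-{p}") for ns in range(32) for p in range(4)]
    print(check_nvmeof_plan(full))                      # []
    print(check_nvmeof_plan(full + [(0, "path-4")]))    # two problems
```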

Support for NVMe over RDMA (RoCE v2)

The NVMe over RDMA (RoCE v2) technology uses RDMA for transport between two systems on the network. Data exchange occurs in main memory, bypassing the operating system and processor of both systems. ESXi 7.0 supports RoCE v2 technology, which enables RDMA over an Ethernet network, so NVMe over RDMA with RoCE v2 provides ultra-efficient storage for performance-sensitive workloads over our existing Ethernet fabric. To access storage, the ESXi host uses an RDMA network adapter installed on the host and a software NVMe over RDMA storage adapter. We must configure both adapters to use them for storage discovery. The configuration process involves setting up VMkernel binding for the RDMA network adapter and enabling a software NVMe over RDMA adapter.

Requirements for NVMe over RDMA (RoCE v2)

NVMe over RDMA (RoCE v2) requires the following components:

• NVMe storage array with NVMe over RDMA (RoCE v2) transport support

• NVMe controller on your storage array

• ESXi 7.0 or later

• Ethernet switches supporting a lossless network

• Network adapter on your ESXi host that supports RoCE v2

• Software NVMe over RDMA adapter enabled on your ESXi hosts

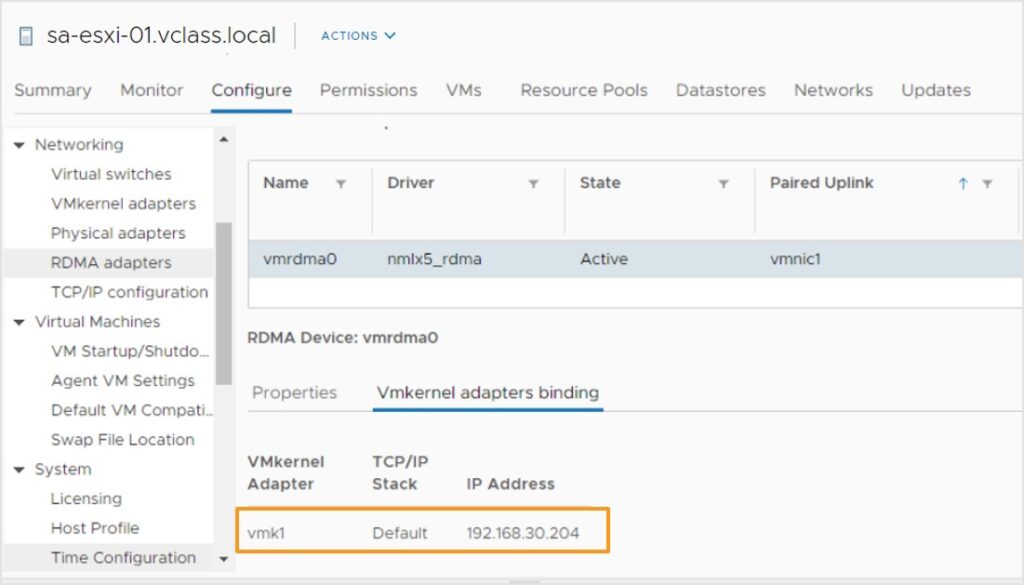

Configuring ESXi for NVMe over RDMA (RoCE v2)

To configure your ESXi host to use NVMe over RDMA (RoCE v2):

1. On your ESXi host, install an adapter that supports RDMA (RoCE v2).

2. In the vSphere Client, verify that the RDMA adapter is discovered by your host.

3. Configure VMkernel binding for the RDMA adapter.

4. Verify the VMkernel binding configuration for the RDMA adapter.

5. Enable the software NVMe over RDMA adapter.

6. Discover NVMe controllers on your array (automatic or manual).

7. Rescan storage to discover NVMe namespaces.

8. Create your VMFS datastores.
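Each of these steps builds on the previous one. For example, the software NVMe over RDMA adapter is enabled on top of an RDMA adapter whose VMkernel binding is already in place. The following Python sketch is a hypothetical checklist that refuses to record steps out of order. Treating the whole list as strictly sequential is a simplifying assumption, and the step labels are shortened paraphrases of the list above.

```python
# Steps from the list above, in order (shortened labels).
STEPS = (
    "install RDMA (RoCE v2) adapter",
    "verify RDMA adapter is discovered",
    "configure VMkernel binding",
    "verify VMkernel binding",
    "enable software NVMe over RDMA adapter",
    "discover NVMe controllers",
    "rescan storage for namespaces",
    "create VMFS datastores",
)


class SetupTracker:
    """Hypothetical checklist that refuses to record steps out of order."""

    def __init__(self):
        self.done = 0  # number of steps completed so far

    @property
    def next_step(self):
        return STEPS[self.done] if self.done < len(STEPS) else None

    def complete(self, step):
        if step != self.next_step:
            raise ValueError(f"expected {self.next_step!r}, got {step!r}")
        self.done += 1


if __name__ == "__main__":
    tracker = SetupTracker()
    for step in STEPS[:3]:
        tracker.complete(step)
    print("next:", tracker.next_step)
    try:
        tracker.complete("create VMFS datastores")
    except ValueError as err:
        print("refused:", err)
```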

Identify the physical network adapter that corresponds to the RDMA adapter.

Configure the VMkernel binding for the RDMA adapter.

Select Add software NVMe over RDMA adapter and select an appropriate RDMA adapter (vmrdma) from the drop-down menu.

The software NVMe over RDMA adapter appears on the list as a vmhba storage adapter. We can remove the adapter if we must free the underlying RDMA network adapter for other purposes.

Pluggable Storage Architecture

Pluggable Storage Architecture (PSA) is an open and modular framework that coordinates various software modules that are responsible for multipathing operations.

VMware provides generic native multipathing modules, called VMware NMP and VMware HPP. In addition, the PSA offers a collection of VMkernel APIs that third-party developers can use. Software developers can create their own load-balancing and failover modules for a particular storage array. These third-party multipathing modules (MPPs) can be installed on the ESXi host and run in addition to the VMware native modules, or as their replacement.

Multiple third-party MPPs can run in parallel with the VMware NMP or HPP. When installed, the third-party MPPs can replace the behavior of the native modules.

The PSA software modules use different mechanisms to handle the path selection and failover.
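In ESXi, the PSA decides which module owns a device through claim rules, which are evaluated in order of rule number. The following Python sketch models that idea as a simple first-match lookup. The rule numbers, the vendor, and the `ExampleVendorMPP` plug-in are hypothetical. The sketch also shows how a third-party MPP can take over devices that would otherwise fall through to the native modules.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ClaimRule:
    number: int                      # lower numbers are evaluated first
    plugin: str                      # e.g. "HPP", "NMP", or a third-party MPP
    matches: Callable[[dict], bool]  # predicate over device attributes


def claim(device, rules):
    """Return the plugin of the first rule (by number) that matches the device."""
    for rule in sorted(rules, key=lambda r: r.number):
        if rule.matches(device):
            return rule.plugin
    return None  # unclaimed


# Hypothetical rule set; numbers, vendor, and plugin names are illustrative.
RULES = [
    ClaimRule(65535, "NMP", lambda d: True),  # catch-all
    ClaimRule(200, "HPP", lambda d: d["transport"] == "nvme-of"),
    ClaimRule(100, "ExampleVendorMPP", lambda d: d["vendor"] == "ExampleVendor"),
]

if __name__ == "__main__":
    print(claim({"vendor": "ExampleVendor", "transport": "fc"}, RULES))
    print(claim({"vendor": "Other", "transport": "nvme-of"}, RULES))
    print(claim({"vendor": "Other", "transport": "sas"}, RULES))
```

The rules are deliberately listed out of order to show that evaluation follows the rule number, not the list position.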

High-Performance Plug-In for NVMe

In vSphere 7, the HPP performs the following functions:

• Acts as the default plug-in that claims NVMe-oF targets

• Supports only active-active and implicit Asymmetric Logical Unit Access (ALUA) targets

• Improves the performance of ultra-fast flash devices installed on ESXi

• Can replace the Native Multipathing Plug-in for local NVMe devices

• Supports multipathing for NVMe-oF

In vSphere 7, HPP integrates path selection and failover logic within a single module, reducing cross-module calls. To support multipathing, HPP uses a Path Selection Scheme (PSS) when selecting physical paths for I/O requests. We can use vSphere ESXi Shell or the vSphere CLI to configure the HPP and PSS. For local NVMe devices, NMP remains the default plug-in, but we can replace it with HPP.
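The following Python sketch shows the general idea of a round-robin path selection scheme with failover handled in the same object. It is a simplified illustration, not HPP's implementation. The threshold `ios_per_path` and the path names are hypothetical, and HPP's actual schemes and their defaults are described in the vSphere Storage documentation. The selector stays on a path for a fixed number of I/Os, then moves to the next path that has not been marked dead.

```python
class RoundRobinSelector:
    """Illustrative round-robin path selection with simple failover.

    Not HPP's implementation: it stays on a path for `ios_per_path` I/Os,
    then moves to the next path that has not been marked dead.
    """

    def __init__(self, paths, ios_per_path):
        if not paths:
            raise ValueError("at least one path is required")
        if ios_per_path < 1:
            raise ValueError("ios_per_path must be at least 1")
        self._paths = list(paths)
        self._ios_per_path = ios_per_path
        self._dead = set()
        self._index = 0   # position of the current path
        self._issued = 0  # I/Os sent on the current path since switching

    def mark_dead(self, path):
        """Record a failed path so selection skips it (failover)."""
        self._dead.add(path)

    def _advance(self):
        # Try each other path once; wrap around to the current one last.
        for _ in range(len(self._paths)):
            self._index = (self._index + 1) % len(self._paths)
            if self._paths[self._index] not in self._dead:
                self._issued = 0
                return
        raise RuntimeError("no active paths left")

    def select(self):
        """Return the path to use for the next I/O."""
        current_dead = self._paths[self._index] in self._dead
        if current_dead or self._issued >= self._ios_per_path:
            self._advance()
        self._issued += 1
        return self._paths[self._index]


if __name__ == "__main__":
    selector = RoundRobinSelector(["path-A", "path-B", "path-C"], ios_per_path=2)
    print([selector.select() for _ in range(6)])
    selector.mark_dead("path-A")
    print([selector.select() for _ in range(3)])
```

Because selection and failover live in one object, a failed path is skipped on the very next I/O without a call into a separate module. This mirrors, in miniature, the single-module design described above.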

Support for High-Performance Plug-In Multipathing

The HPP improves the performance of NVMe devices on your ESXi host.

High-Performance Plug-In Best Practices

To achieve the fastest throughput from a high-speed storage device, follow these recommendations:

• Use the HPP for NVMe local or networked devices.

• Do not activate the HPP for HDDs or slower flash devices. The HPP does not provide any performance benefits with devices incapable of at least 200,000 IOPS.

• When using FC-NVMe, follow general recommendations for Fibre Channel storage.

• When using NVMe-oF, do not mix transport types to access the same namespace.

• Before registering NVMe-oF namespaces, ensure that active paths are presented to the host.

• Configure your VMs to use VMware Paravirtual controllers.

• Configure your VMs to use the High latency sensitivity setting.

• If a single VM drives a significant share of the device's I/O workload, consider spreading the I/O across multiple virtual disks and attaching the disks to separate virtual controllers in the VM. Otherwise, I/O throughput might be limited by saturation of the CPU core that is responsible for processing I/O operations on a virtual storage controller.
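Two of these recommendations reduce to simple rules, and the following Python sketch encodes them. The first rule uses the HPP only for NVMe devices capable of at least 200,000 IOPS. The second spreads a VM's virtual disks round-robin across separate virtual controllers. The device-kind labels are hypothetical. The default of four controllers reflects the vSphere limit of four SCSI controllers per VM. This is a planning aid, not a substitute for checking the device's real capabilities.

```python
HPP_MIN_IOPS = 200_000  # below this, the HPP gives no performance benefit
NVME_KINDS = {"nvme-local", "nvme-of"}  # hypothetical device-kind labels


def should_use_hpp(device_kind, rated_iops):
    """Apply the best practices above: NVMe only, and only fast enough devices."""
    return device_kind in NVME_KINDS and rated_iops >= HPP_MIN_IOPS


def spread_disks(disks, controllers=4):
    """Assign virtual disks round-robin to separate virtual controllers."""
    layout = {i: [] for i in range(controllers)}
    for index, disk in enumerate(disks):
        layout[index % controllers].append(disk)
    return layout


if __name__ == "__main__":
    print(should_use_hpp("nvme-of", 500_000))
    print(should_use_hpp("hdd", 1_000_000))
    print(spread_disks(["disk1", "disk2", "disk3", "disk4", "disk5"]))
```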

Closing Note

I hope it has been useful to you. For more details, see the official vSphere 7 documentation. In the next post, we will see the vSAN enhancements!